Fractal Define 7 48TB NAS Build

Introduction

I am moving my Unraid NAS from a dedicated rack case to a new, more conventional case. Here, I have tried to document my thoughts and progress.

Hardware

- Supermicro X11SSM-F

- Intel Pentium CPU G4560

- 8 GiB DDR4 ECC

- 2x Noctua NF-A8 PWM

- Noctua NH-U9S

- Inter-Tech IPC 4U-4408 - 4U

- LSI SAS 9217-4i4e

- Seasonic Prime Ultra Gold 550W

- 8x 8TB HDD WD80EMZZ

- 2x 500GB SSD 850 EVO

Sanity Check

| Inter-Tech IPC 4U-4408 | vs | Define 7 |
|---|---|---|
| Hot swap ✅ | - | ✅ Better HDD Temp |
| Backplane ✅ | - | ❎ 3.3 V Pin Problem? |
| Simpler Cable Setup ✅ | - | ❎ Splitters / Many Cables |
| MAX 8 HDD ⏺️ | - | ⏺️ MAX ~13 HDD |
| 80 mm Fans ❎ | - | ✅ 120 mm Fans |
| Loud ❎ | - | ✅ Noise Dampened |
| Rack mounted ✅ | - | ❎ Way too big |

1. Hot swap vs Better HDD Temp

Well, the nice thing about HDD cages with hot-swap capabilities is that you can exchange an HDD without taking the system apart or even having to shut it down. Before anyone comments on the fact that you can also hot-swap normally attached SATA drives... well, yes and no. First off, it's way too cramped inside the case to even try to pull this off without accidentally pulling the wrong cable.

Then there is the problem that some motherboards really do not understand what you just did and will not recognize the newly plugged-in HDD. You can somewhat solve this by using a server board like my Supermicro one, but this hassle is not worth it, in my opinion.

I understand that if you need 100% uptime, shutting down a system is not a viable option, but in most home users' cases, this should not be a problem.

2. Backplane vs 3.3v Pin Problem

Okay, so my thoughts on this are as follows: most backplanes will run shucked drives without problems. However, if you attach them directly to a normal power supply via a SATA power cable and you are unlucky enough to have an "Enterprise" HDD, it will not spin up, because these drives treat the 3.3 V pin on the SATA power connector as a power-disable signal. Now, you can cut off the pin or tape it over, but it's still a bit of a hassle, and if you don't already know about this, you will be left dumbfounded.

On the other hand, if the backplane breaks, you are somewhat out of luck. At least for my Inter-Tech IPC 4U-4408 - 4U, I cannot find any reliable spare backplane. I could try contacting support or, in the worst-case scenario, buy the same case again, but the aftermarket support is really lackluster.

3. Simpler Cable Setup vs Splitter / Many Cables

Did you also notice that one of the main features a server/rack-mounted case offers is the backplane?

Well, regardless, wiring up a backplane is really nice. Most take Molex or SATA power and connect the drives via a breakout cable like the SFF-8087.

Buying these online can be hell because these cables are not bidirectional. You have to find one with a good description, like this "Reversed Cable Mini SAS 36Pin (SFF-8087) Male to 4 SATA 7Pin female Cable, Mini SAS (Target) to 4 SATA (Host) Cable", to ensure it will do what you want.

This makes wiring everything up so much easier. For my eight drives right now, I only needed two Molex cables and two of these SFF-8087 to SATA cables, and everything is neatly connected.

If I were to wire all this up in a normal case, I would currently need eight SATA data cables and eight SATA power cables. The biggest problem here is that these SATA connectors, especially on those 4x splitter cables, are either too far apart from each other or too stiff to fit nicely when trying to wire up all these disks. It is doable, no question, but all in all, it's way more work and messier than the backplane solution.

4. MAX 8 HDDs vs MAX ~13 HDDs

Size matters. If you have eight cages for hot-swaps, that is your limit. I am personally running 8 x 8 TB HDDs right now and would not increase this number; if anything, I would go with bigger and fewer drives if possible.

So yeah, it does not really matter. Of course, you can stuff way more HDDs into a case if you do not have to worry about hot-swaps.
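For reference, here is a quick sketch of the capacity arithmetic behind eight 8 TB drives. It assumes an array where parity drives contribute no usable space. The parity count of two is an assumption, picked only because it lines up with the 48TB in the title, and the function names are made up for illustration.

```python
def usable_tb(drive_count: int, drive_tb: int, parity_drives: int) -> int:
    """Usable capacity in TB when parity drives add no storage space."""
    if not 0 <= parity_drives <= drive_count:
        raise ValueError("parity_drives must be between 0 and drive_count")
    return (drive_count - parity_drives) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


raw = usable_tb(8, 8, parity_drives=0)      # all eight drives counted
usable = usable_tb(8, 8, parity_drives=2)   # assumed: two drives used for parity
print(f"raw: {raw} TB, usable: {usable} TB, about {tb_to_tib(usable):.1f} TiB")
```

The TiB figure is what most operating systems will actually display, which is why the number on screen looks smaller than the one on the box.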

5. 80 mm Fans vs 120 mm Fans

Like before, size matters!

Out of the box, the Inter-Tech IPC 4U-4408 - 4U can only handle 2x 80 mm fans in the rear as exhausts. You could DIY some fans under the HDD cage, but again nothing bigger than 80 mm. The problem here is that if you are running the server in a less-than-ideal environment (i.e., not in an AC-cooled server room), you will slowly start to cook the disks. In warmer months, the ambient temperature can reach around 30-35°C.

This has led to HDD temps of around 50°C. Even in times when the ambient temperature was only around 20°C, the disks stayed around 40°C. Some like to argue that disks operate acceptably between 40°C - 50°C; if you look at the spec sheets, they will often even work up to 65°C. However, I really do not like these numbers. The CPU inside the case is colder than that! I would love for the HDDs to reach around 35°C. The only solution?

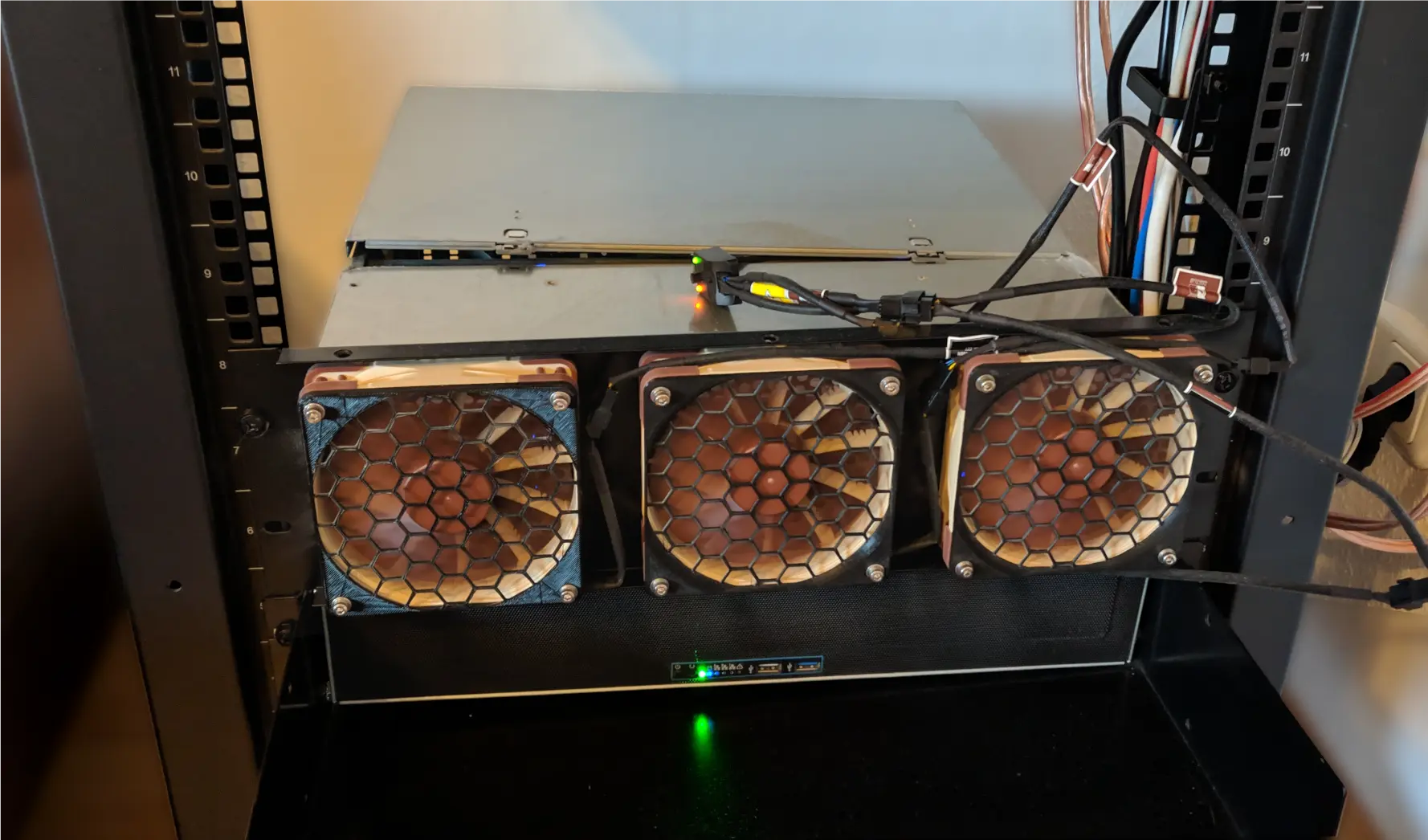

🌀🌀🌀FANS!🌀🌀🌀

That is how this ghetto-fixed solution came about.

- 3x NF-F12 PWM

- 1x NA-FC1

It works, though it's not the best, because I am basically blowing air against a wall and trying to force airflow between the tightly packed cages, HDDs, and backplane. This currently manages to keep the drives under 45°C in the hotter months. It's not perfect, but it was at least a stop-gap solution.
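To keep an eye on numbers like these, the drive temperature can be read from SMART data. Below is a minimal sketch that parses text in the layout `smartctl -A` prints and pulls out attribute 194 (Temperature_Celsius). The sample text is made up for illustration, not captured from my drives, and the three rating bands are just my own reading of the temperatures mentioned above (35°C as the goal, 45°C as the stop-gap limit).

```python
from typing import Optional

# Illustrative sample in the layout of `smartctl -A`; the values are invented.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  9 Power_On_Hours          0x0012   098   098   000    Old_age   Always       -       17520
194 Temperature_Celsius     0x0002   144   144   000    Old_age   Always       -       45 (Min/Max 20/52)
"""


def read_temperature(smart_text: str) -> Optional[int]:
    """Return the raw value of SMART attribute 194, or None if it is missing."""
    for line in smart_text.splitlines():
        fields = line.split()
        # The raw value is the tenth column; anything after it is extra detail.
        if len(fields) >= 10 and fields[0] == "194":
            return int(fields[9])
    return None


def rate(temp_c: int) -> str:
    """Sort a temperature into the bands discussed in this post."""
    if temp_c <= 35:
        return "target"
    if temp_c <= 45:
        return "acceptable"
    return "too hot"


temp = read_temperature(SAMPLE)
if temp is not None:
    print(f"{temp}°C is {rate(temp)}")
```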

This leads us to the next point!

6. Loud vs Noise Dampened

Unsurprisingly, this is loud. The fans need to run at high speed, so even with Noctua fans, you can still hear them at 50% capacity. Sound-wise, the whole blowing-against-a-wall setup is also not helping.

Did you ever notice how silent laptops and computers became once SSDs existed and became more common? Well, you learn to appreciate it when you hear 48 HDDs spinning and clicking at the same time. Okay, my case is not that bad with only eight HDDs, but it is the loudest piece of equipment in my living room.

I may sound like a broken record, and you will probably think, "No shit, Sherlock!" but these server cases are really not designed to be in the same room as you. However, here we are; I do not have any other option. The hope is that the Define 7, with its dampening and better airflow design, will not only reduce the temperature of the disks but also the noise level.

Will this work out? Well, I fucking hope so. The cooling will be 100% better because the drives will have more room, and the fans will spin more freely. Combined with the wider possibility of mounting up to nine fans, there are way more options to create a good heat funnel.

7. Rack mounted vs Way too big

This is a chronic disease: I want to rack-mount everything, but not everything wants to be rack-mounted. Otherwise, it ends up extremely expensive, custom-made, or it sucks ass in comparison with non-rack-mounted solutions. Server cases are the prime example: they are expensive, big, and suck.

I have always wanted to transplant my gaming PC into one of these, but they all suck. Just to get this out of my system.

- No fans or only 80 mm shit, with luck 120 mm*

- CPU cooler clearance means a 4U-high server, and even then you cannot mount any big boys because most clearance is only around 155 mm

- Heavy as fuck

- Either lay that thing on a rack tray or you need to buy rails

- Way too fucking long for most use cases as a gaming PC.

*There are special cases, like these cases, but they are mostly designed for liquid cooling because, again, there are not really any exhaust fans or any other way to mount fans than the three in the front.

I mean, look at my current setup...

Okay, I only have a 2-post rack, but there is no chance in hell I can get this mounted in it. So, this point should have been neutral. The biggest impact would be that there are only a handful of cases that you could lay on their side to fit inside a 19-inch rack, and those have their own limitations. This means that I will lose a lot of mounting space the moment I add the Define 7, which is 475 mm in height.
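To put the lost mounting space into rack units, here is a small sketch of the conversion. One rack unit is 1.75 inches, so 44.45 mm. The only input taken from this post is the 475 mm case height; the function name is made up, and it assumes the case stands upright in the space the rack would otherwise use.

```python
import math

RACK_UNIT_MM = 44.45  # 1U = 1.75 inches


def rack_units_needed(height_mm: float) -> int:
    """Number of whole rack units a given height occupies, rounded up."""
    return math.ceil(height_mm / RACK_UNIT_MM)


define_7_height_mm = 475
print(f"{define_7_height_mm} mm takes up {rack_units_needed(define_7_height_mm)}U")
```

That comes out at 11U, compared with the 4U of the old case.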

New Build Time 🔥

Yeah, we are doing this. I reused most of the components that I already had, except that I upgraded the RAM to 16 GiB, mostly because I found the RAM randomly on eBay. Otherwise, it was somewhat hard to find an identical stick, and my guess is that it will only get harder because it is EOL.

Hardware

- Supermicro X11SSM-F ♻️

- Intel Pentium CPU G4560 ♻️

- 16 GiB DDR4 ECC (KVR21E15D8K2/16) 🆕

- 3x Noctua NF-F12 PWM ♻️

- Noctua NH-U9S ♻️

- Fractal Design Define 7 PCGH 🆕

- LSI SAS 9217-4i4e ♻️

- SilverStone SST-CP06-E4 🆕

- Seasonic Prime Ultra Gold 550W ♻️

- Noctua NF-A4x20 PWM 🆕

- 8x 8TB WD80EMZZ ♻️

- 2x 500GB SSD 850 EVO ♻️

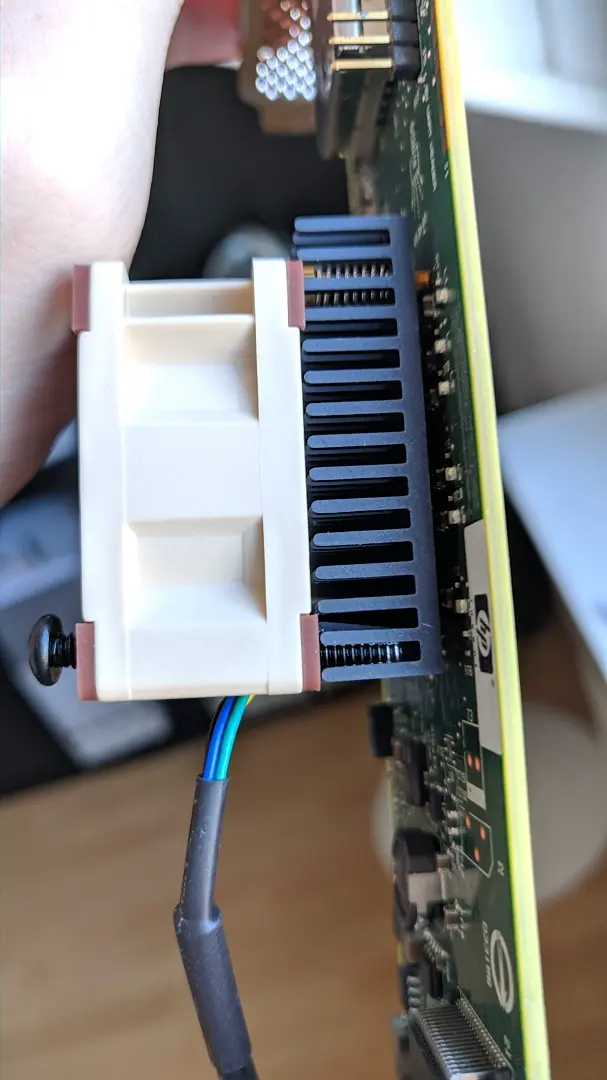

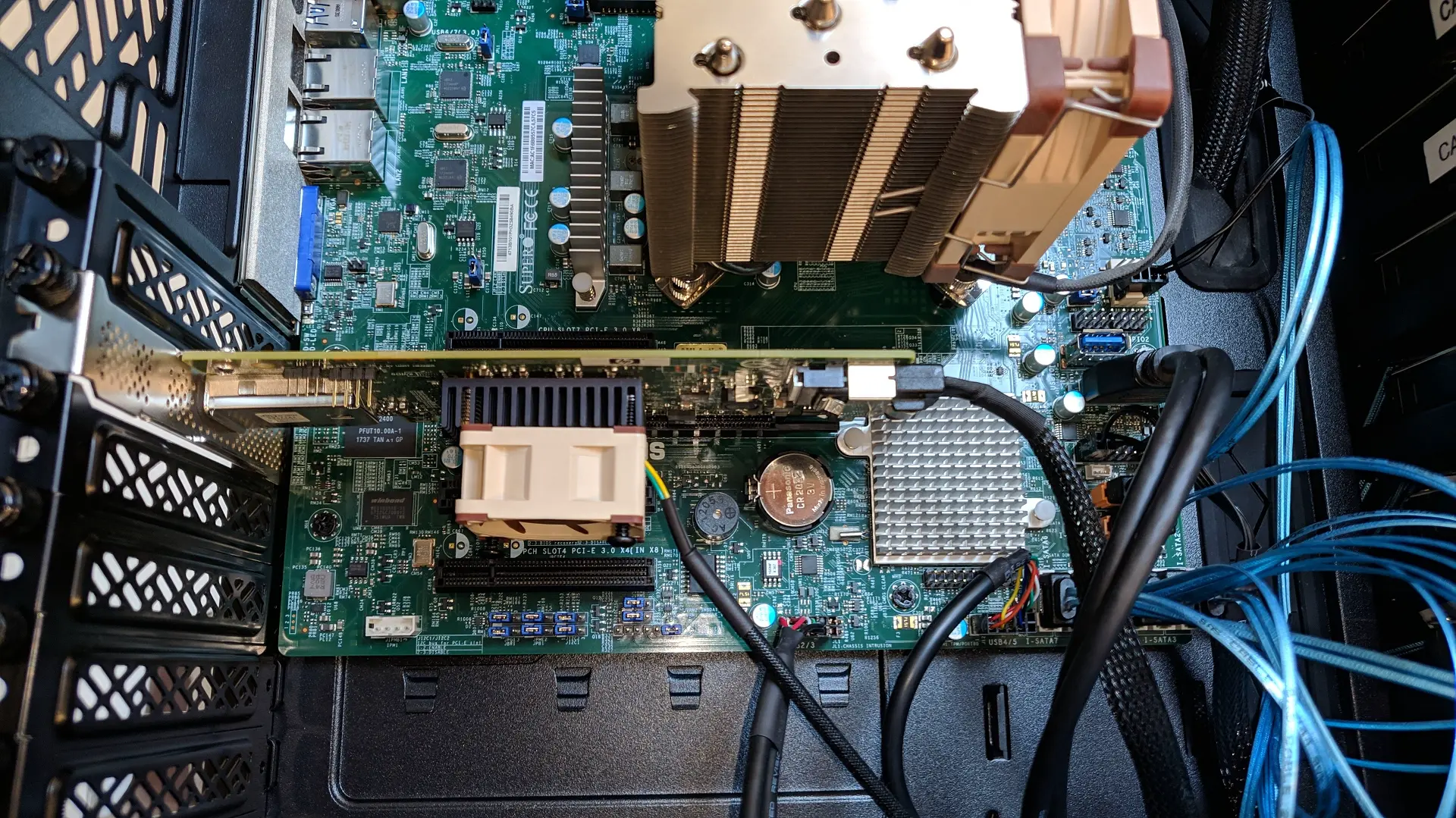

1. LSI SAS 9217-4i4e Cooling

It's not the biggest problem in the world, but when you are redoing a system, why not fix or improve something?

These RAID cards like to get hot, mostly because they normally expect to be run in a server environment. So, how do we fix this?

🌀🌀🌀Right, more FANS!🌀🌀🌀

The hardest part was finding screws that were long enough and would fit between the heat sink fins. Then, I just started screwing. In my defense, I could have cut the screws to the appropriate length, but I was lazy. This still holds very tightly and does not move.

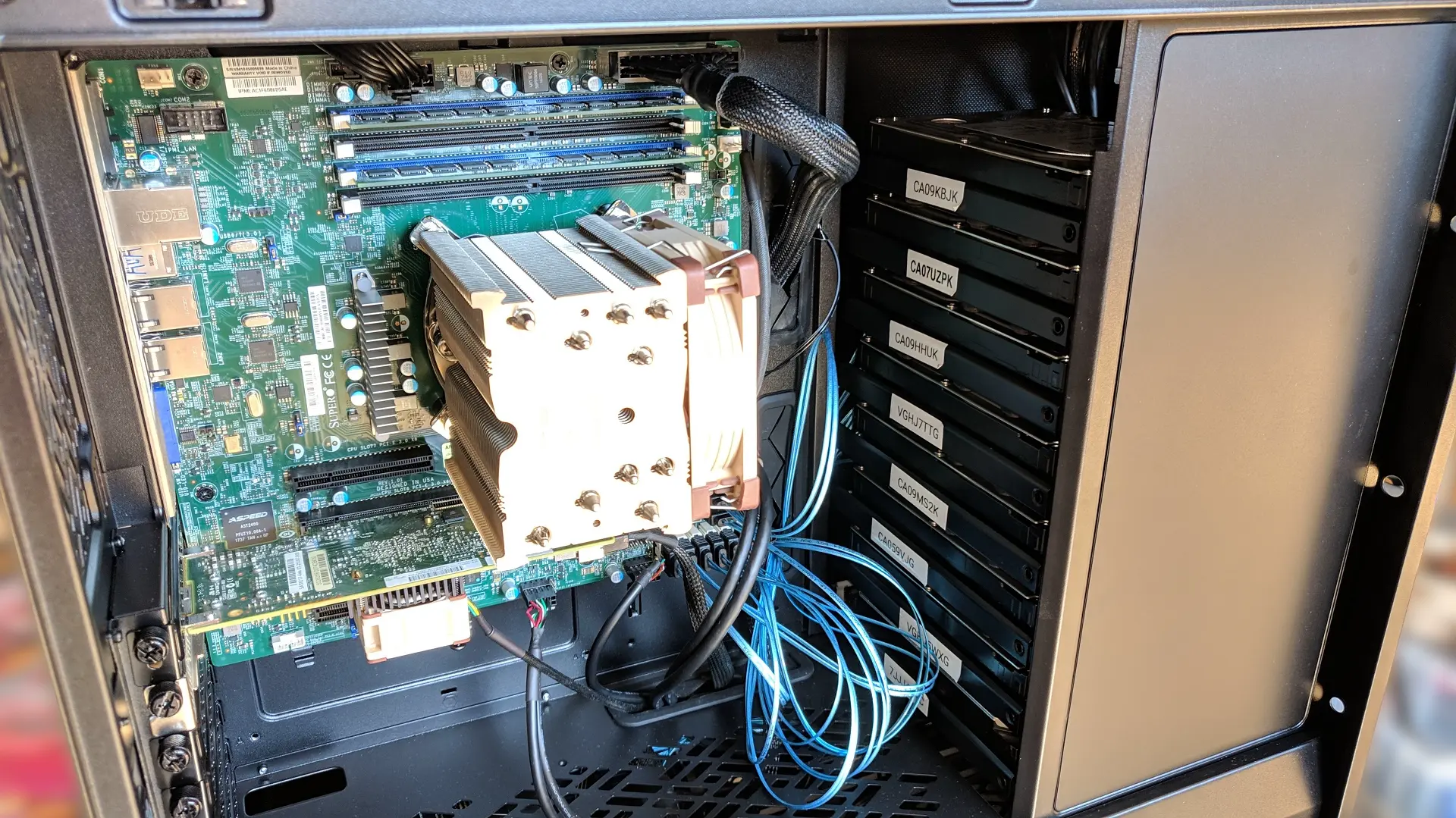

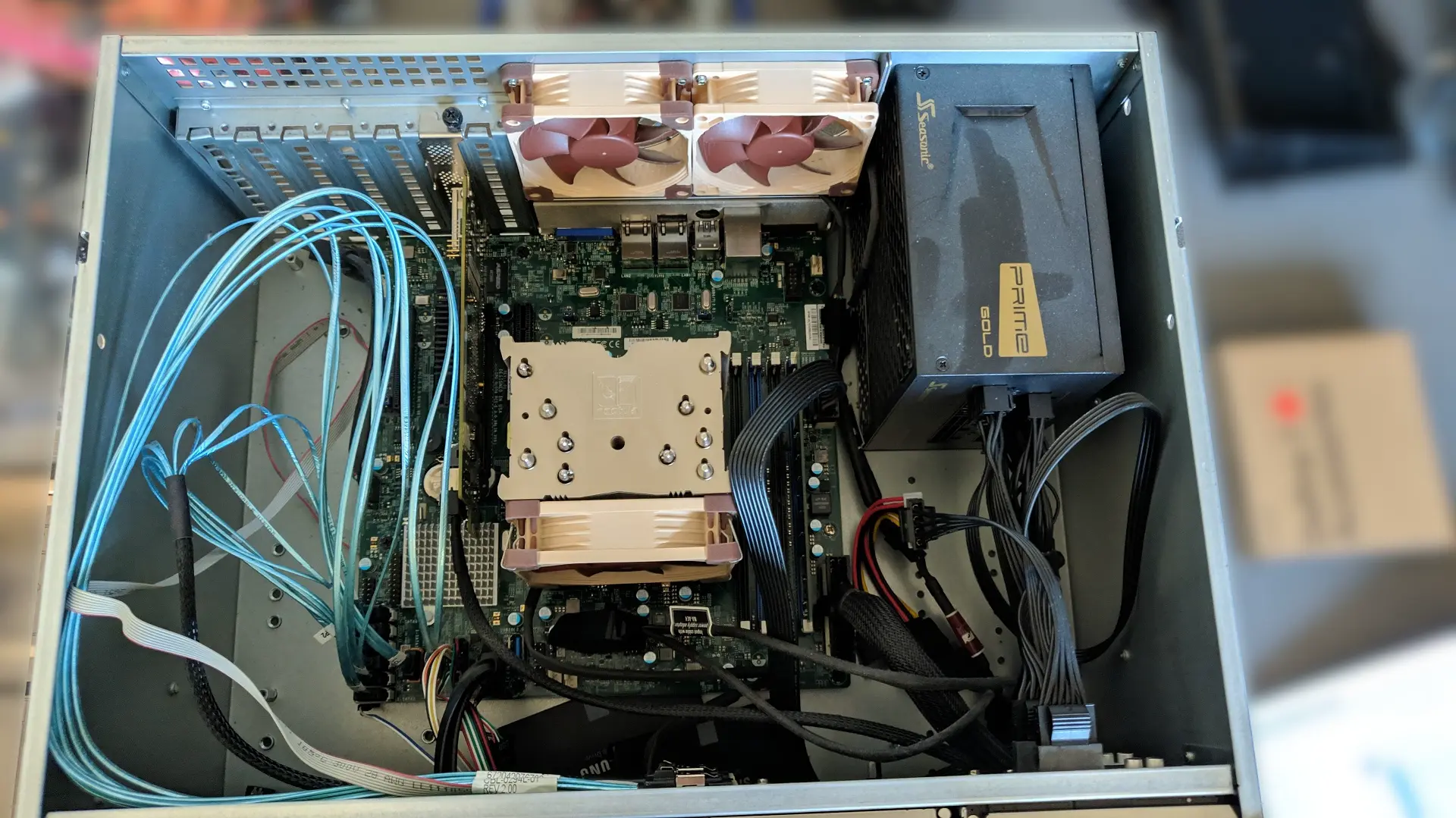

2. Setting everything up

The rest of the build went smoothly. First, I replaced the 3-pin fans at the front with my own Noctua NF-F12 PWM. I like these anti-vibration pads from Noctua, but with this slot mounting solution, they do not really have any grip, so they basically all stack on top of each other.

I then installed the motherboard and started to route the cables down to the power supply. After this, I connected all fans and the front panel I/O. Finally, I inserted all data HDDs and the SSD cache drives.

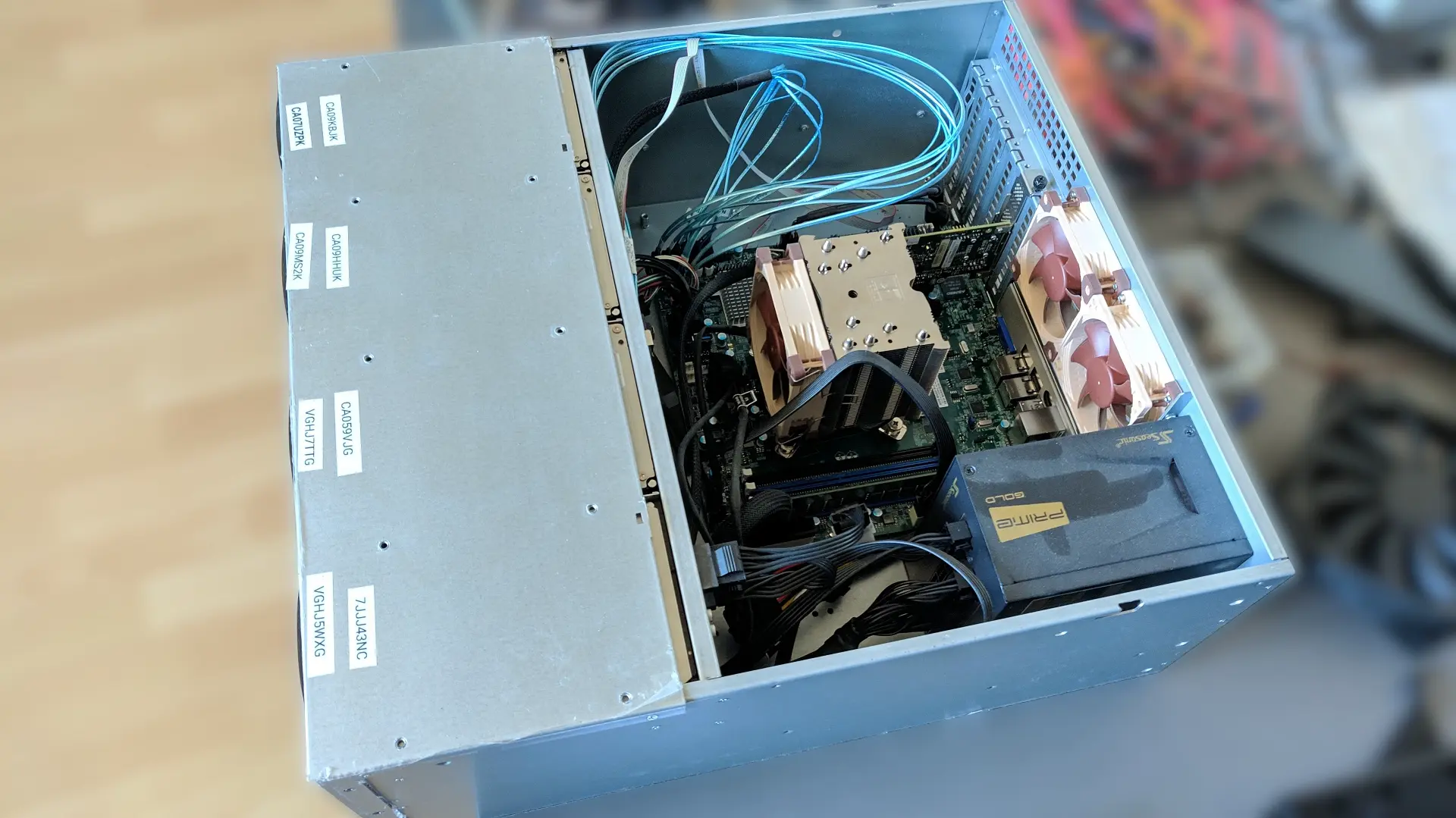

I looked for a better place to keep track of which drive is located where and could not really find any better location than on the side of the HDD trays. So, if I ever have to replace one and forget where it was, I will have to open both sides of the case to find it. Well, that's not too bad, but I would have liked a way to mark them on the connector side.

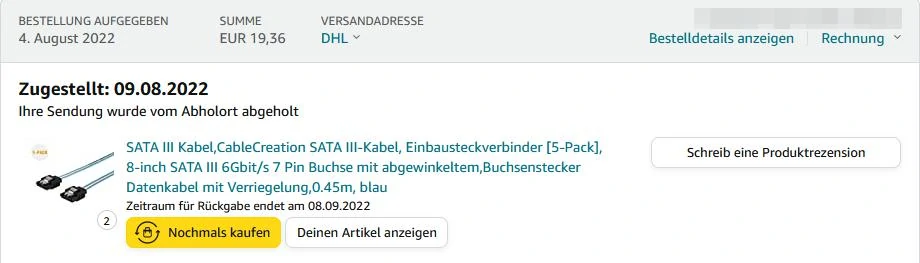

At least I managed to mark the SATA ports according to their layout. The real MVPs here were the SilverStone SST-CP06-E4 adapters; they helped so much with easily connecting all the SATA power connectors. The CableCreation SATA data connectors were also really nice to work with.

(Update: fuck these; they were the reason the HDDs were throwing CRC errors out of nowhere. I went back to normal SATA cables. Also, they changed the listing.)
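Cable problems like this usually show up in SMART attribute 199 (UDMA_CRC_Error_Count). The counter is cumulative, so the absolute number matters less than whether it keeps growing. Here is a rough sketch of comparing two snapshots of that counter per drive. The device names and counts are invented for illustration, and collecting the snapshots (for example from `smartctl -A`) is left out.

```python
from typing import Dict, List


def rising_crc(before: Dict[str, int], after: Dict[str, int]) -> List[str]:
    """Return the drives whose CRC error count grew between two snapshots.

    A drive missing from the first snapshot is treated as starting at zero.
    """
    return sorted(
        drive for drive, count in after.items() if count > before.get(drive, 0)
    )


# Hypothetical snapshots taken a few days apart.
last_week = {"sdb": 0, "sdc": 12, "sdd": 3}
today = {"sdb": 0, "sdc": 57, "sdd": 3}

for drive in rising_crc(last_week, today):
    print(f"{drive}: CRC errors still climbing, check the SATA data cable")
```

A drive like the hypothetical `sdd`, with an old but stable count, is not flagged, because those errors may date from a cable that has already been replaced.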

Time for the first test run. Lucky me, all disks showed up without any problems!

Everything looks good except... the fans, which are spinning at maximum RPM.

I actually encountered this problem the first time I set up this server. You can control the fans via the IPMI interface, but the Supermicro X11SSM-F has preconfigured fan thresholds that are somewhat useless for "normal" fans. They either run at 100% or, in other modes, spin down but become so slow that the motherboard thinks it's a fan failure and spins all fans up to 100%. This then loops endlessly.
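The loop comes from the lower RPM thresholds sitting above what a slow fan actually spins at. One way out is to lower them with the `sensor thresh` subcommand of ipmitool. The sketch below only builds the command instead of running it. The sensor name `FAN1`, the minimum RPM values, the 20% safety margin, and the 100 RPM step are all assumptions for illustration; look up the real minimum RPM on the fan's spec sheet and the sensor names in your own IPMI sensor list.

```python
from typing import List


def lower_thresholds(min_rpm: int, margin_percent: int = 20, step: int = 100) -> List[int]:
    """Pick three ascending lower thresholds that sit below the fan's minimum RPM.

    Returns [non-recoverable, critical, non-critical], each a multiple of `step`.
    """
    # Leave some headroom below the rated minimum, then round down to the step.
    highest = (min_rpm * (100 - margin_percent) // 100) // step * step
    return [max(0, highest - 2 * step), max(0, highest - step), max(0, highest)]


def thresh_command(sensor: str, min_rpm: int) -> List[str]:
    """Build (but do not run) the ipmitool call that sets the lower thresholds."""
    lnr, lcr, lnc = lower_thresholds(min_rpm)
    return ["ipmitool", "sensor", "thresh", sensor, "lower", str(lnr), str(lcr), str(lnc)]


# Hypothetical example: a 120 mm fan that idles at roughly 300 RPM.
print(" ".join(thresh_command("FAN1", 300)))
```

Printing the command first makes it easy to double-check the numbers before handing anything to the BMC.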

So, there are many ways to fix this now: redo the thresholds, set new fan speeds, etc. However, I was lucky and found this GitHub repo with a very nice script for Unraid that does all the heavy lifting and adjusts the fan speeds depending on predetermined values.

- Install ipmitool

- Follow this guide unRAID-SuperMicro-IPMI-Auto-Fan-Control

What can I say? After some modifications, it works perfectly for me. I mostly had to change the PERIPHERAL FAN ZONE DUTY CYCLES and CPU FAN ZONE DUTY CYCLES because, in my case, any duty cycle under 0x32 (50% speed) caused the fans to spin up and down wildly again. I partly blame this on connecting all fans to the preinstalled Nexus fan hub and falling below the minimum RPM of the small Noctua fan.
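To illustrate the idea of a duty cycle floor, here is a small sketch that maps a drive temperature to a duty cycle and never goes below 0x32. This is not the logic of the linked script, which works with predetermined values; the linear ramp and the 35°C and 45°C corner points are my own assumptions. The raw bytes `0x30 0x70 0x66 0x01` are the ones commonly passed around for Supermicro boards of this generation, and zone 0 for the CPU and zone 1 for the peripherals is the usual convention, but treat both as unverified for your board. The command is only built, not executed.

```python
from typing import List

MIN_DUTY = 0x32  # 50%, the lowest value that stayed stable in my setup
MAX_DUTY = 0x64  # 100%


def duty_for_temp(temp_c: float, low_c: float = 35, high_c: float = 45) -> int:
    """Linear ramp from MIN_DUTY at low_c to MAX_DUTY at high_c, clamped at both ends."""
    if temp_c <= low_c:
        return MIN_DUTY
    if temp_c >= high_c:
        return MAX_DUTY
    span = MAX_DUTY - MIN_DUTY
    return MIN_DUTY + round(span * (temp_c - low_c) / (high_c - low_c))


def raw_fan_command(zone: int, duty: int) -> List[str]:
    """Build (but do not run) the assumed raw command that sets a zone's duty cycle."""
    return ["ipmitool", "raw", "0x30", "0x70", "0x66", "0x01", f"0x{zone:02x}", f"0x{duty:02x}"]


# Hypothetical reading: hottest drive at 40°C, applied to the peripheral zone.
print(" ".join(raw_fan_command(zone=1, duty=duty_for_temp(40))))
```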

So yeah, this IPMI fan control shit is always a pain in the ass, but once it works, you are set for life.

3. Conclusion

This is how it looks all together: somewhat blank, but exactly what I wanted.

Well, with this, the project is done. I like the new setup, and it is definitely way quieter than the old one. The only time I can now hear the system is when the fans spin up to 100%, but even then, it needs to be really quiet for me to notice.

The HDD temps are also way better now. With the fans now directly in front of the disks, plus the slight gap between them, a much-improved airflow is established. True, on the hottest summer days, the fans will now spin up to 100%, but this is not completely avoidable when your room reaches 35 degrees Celsius ambient temperature.

All in all, this project is now done! ✅

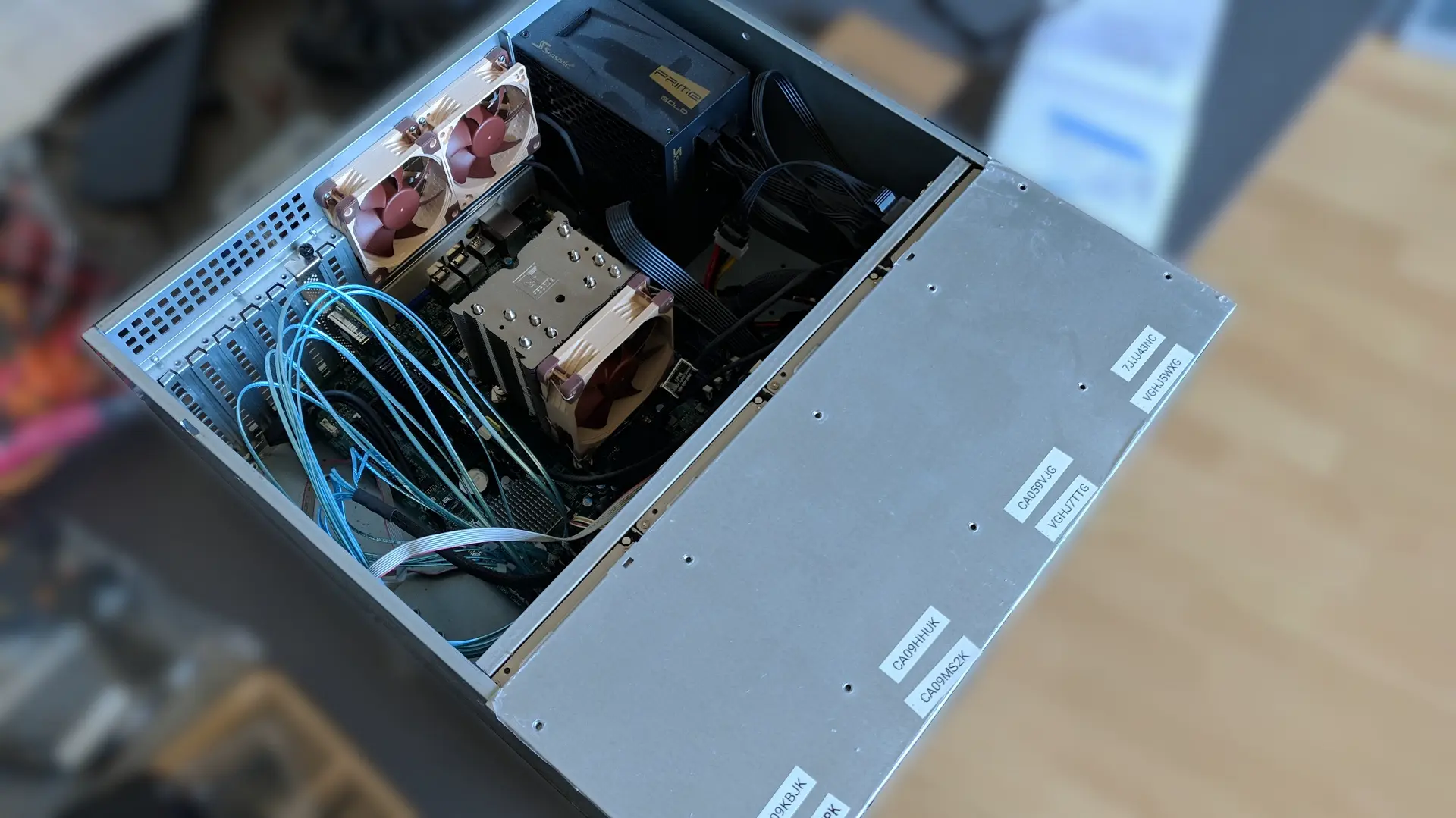

Random Notes

- GitHub - kasuganosoras/SuperMicro-IPMI-LicenseGenerator: 🔑 SuperMicro motherboard IPMI advanced feature license unlock key generator

- Reverse Engineering Supermicro IPMI – peterkleissner.com

- Arctic 120mm PWM Fan HUB (unused, because I used the included Nexus+ 2 and enough splitters.)

- SATA cable for Prime and Focus (PSU manufacturers think they are funny and use different pinouts, so be careful what you buy and make sure the pinouts match.)